Hello from LMArena: The Community Platform for Exploring Frontier AI

At LMArena, everything starts with the community. Many new members have joined us in the past few months, so we thought it would be a good time to reintroduce ourselves!

Created by researchers from UC Berkeley’s SkyLab, LMArena is an open platform where everyone can easily access, explore and interact with the world’s leading AI models. When we launched the first leaderboard two years ago, we weren’t trying to build a company. Instead, our interests have always been grounded in research values. From the start, we wanted to know how we might create a rigorous, reproducible, community-led framework for real-world model evaluation.

Since then, the community has helped us evaluate over 400 models across text, vision, coding, and more, taking part in tens of millions of head-to-head battles that directly shape which AIs rise to the top. Your preferences have already changed how models are trained, which ones get released, and what improvements labs prioritize next.

We believe human preferences are essential to building better AI. They’re subjective, diverse, and complex, which is why everyone should have the ability to contribute to AI progress.

How LMArena Works

On LMArena, anyone can explore and interact with the world’s leading AI models. The arena is designed like a tournament where models are compared anonymously side by side and users vote for the better response. This structure of anonymous battles, dynamic prompts, and rotating users was designed to reduce bias and reflect diverse, real-world use cases, making it possible to generate statistically meaningful insights.

With votes, the community helps shape a public leaderboard, making AI progress more transparent, accessible, and grounded in real-world usage. The more people prompt and vote, the more everyone learns together and impacts AI progress. Sometimes you may find two responses to be a tie, or that both responses aren’t up to your standards. You decide. Everyone votes differently, and that’s okay! There’s no pressure to vote if you’re unsure which answer is better. Since there's no payment or external incentive, votes come from intrinsic motivation. That’s what makes this community unique. The votes are high-quality because they're grounded in genuine interest. We have a diverse range of subject-matter experts: people you can’t hire through labeling firms, contributing authentic, thoughtful evaluations on their own prompts.

Over time, these votes in Battle mode add up to a public leaderboard that reflects collective, real-world judgment. It’s not based on benchmarks or automated scoring; it’s based on how people actually use AI. In fact, around 70% of prompts each month are fresh, meaning it’s impossible for any AI model to predict and plan for what it will be evaluated on, no matter how many variants or evaluations are run.
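To make the idea of votes "adding up" to a leaderboard concrete, here is a minimal sketch of one common way to turn head-to-head votes into ratings: an Elo-style online update. This post does not specify the rating model behind the leaderboard, so treat the method, the function names, the constants (`k`, `initial`), and the choice to score "tie" and "both_bad" votes as half a win for each side as illustrative assumptions.

```python
from collections import defaultdict

# Assumption: ties and "both responses are bad" votes count as half a win each.
OUTCOME_SCORE = {"a": 1.0, "b": 0.0, "tie": 0.5, "both_bad": 0.5}


def expected_score(rating_a, rating_b):
    """Probability that A beats B under the standard Elo logistic curve."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(votes, k=4.0, initial=1000.0):
    """Replay (model_a, model_b, outcome) votes and return a rating per model.

    k and initial are hypothetical constants chosen for illustration.
    """
    ratings = defaultdict(lambda: initial)
    for model_a, model_b, outcome in votes:
        score_a = OUTCOME_SCORE[outcome]
        exp_a = expected_score(ratings[model_a], ratings[model_b])
        delta = k * (score_a - exp_a)
        ratings[model_a] += delta  # the winner gains what the loser gives up
        ratings[model_b] -= delta
    return dict(ratings)


def leaderboard(ratings):
    """Sort models from highest to lowest rating."""
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical model names and votes, for illustration only.
    example_votes = [
        ("model-x", "model-y", "a"),
        ("model-y", "model-z", "tie"),
        ("model-z", "model-x", "b"),
    ]
    for name, rating in leaderboard(update_ratings(example_votes)):
        print(f"{name}: {rating:.1f}")
```

An online update like this depends on the order in which votes arrive; a batch fit such as a Bradley-Terry model is a common alternative when order should not matter.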

In addition to Battle mode, you can also explore specific models in Side-by-Side chat or Direct chat. In these modes, you can go deeper with the models you prefer. All prompts and any votes in these modes are collected for transparent research, but do not contribute to any leaderboards as the models are not anonymous. We also never share any personal information; only the prompts and votes are collected for research purposes and may be posted publicly.

How Model Testing Works

How do we bring all these models to the community? Well, we work directly with model providers, from open-source teams to major AI labs, to help them test, compare, and improve their models before and after official release. We’ve been doing this now for over a year, and given our capacity, we’re continuing to do our best to honor all the evaluation requests we receive. We began evaluations in March 2024. Since then, a wide range of models has been tested (we celebrated the community’s impact in our two-year celebration post). Big, well-known labs like Google, Meta, Alibaba and OpenAI are represented, as they are incredibly active in releasing models the community is eager to test. At the same time, thanks to community support and requests, open-source and emerging labs have a platform here too. Over 40% of battles include an open model.

Some of these models are introduced to the community privately, under pseudonyms or codenames, which helps companies and developers experiment and learn about what to release publicly. The more pre-release models are tested, the more the community gets to test the AI frontier and impact AI progress.

By offering a shared infrastructure for both pre-release and public testing, LMArena supports a more reproducible and transparent evaluation process across the AI research ecosystem. Every model provider makes different choices about how to use and value human preferences, so some engage with our community more than others. For any model that is ultimately released publicly, scores are released to the public leaderboard.

The rules are simple:

- Only publicly released models with longer-term support get ranked.

- All models are treated the same in evaluation; it’s the community’s votes that decide the outcome.

We only publish scores for models that are publicly available, so the community can access them directly and verify results through their own testing. When providers test on LMArena, they’re learning from your feedback, whether it’s which phrasing sounds more helpful, which coding output you prefer, or what tone feels more human. The LMArena leaderboard is designed to show which AI models people genuinely prefer.

Hear more about how it all works in this podcast with our cofounders:

LMArena’s evaluations rely on randomized, large-scale comparisons that provide a more dynamic and statistically grounded picture of model performance. This means that each model is tested with constantly changing prompts and a rotating user base—unlike static benchmarks or internal QA tests that use repeatable conditions. The models can’t memorize a test. Each model just has to be better, in the eyes of the community.

We also retire models which are no longer available to the public to keep the community’s experience relevant and up to date. On LMArena, the whole community gets to explore and shape the frontier of AI as it evolves. It’s incredible how pre-release testing and data can help models optimize for millions of real people’s preferences.

Ensuring a Great Experience for the Community

The community has made it very clear they are here to explore the best and newest AI models. This is why you may notice that some models appear more frequently in battles. That’s because we upsample:

- New models: to collect meaningful data faster for everyone

- Top-voted models: so you get high-quality comparisons more often

This helps the leaderboard converge quickly and ensures that you can spend time with the most relevant systems you’ve been asking for. As the leaderboard reflects millions of fresh, real human preferences, our goal is to apply scientific rigor so that it reflects the voices of the community as accurately as possible.
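As a rough illustration of what upsampling can look like, the sketch below gives every model a base sampling weight and multiplies it for new models and for the current top-rated ones, then draws two distinct models for a battle. The boost factors, the `top_k` cutoff, the field names, and the function names are all hypothetical; the post does not describe the actual sampling rules.

```python
import random


def sampling_weights(models, new_boost=3.0, top_boost=2.0, top_k=2):
    """Return a sampling weight per model name.

    models: list of dicts with 'name', 'is_new', and 'score' keys.
    new_boost, top_boost, and top_k are illustrative values, not real settings.
    """
    ranked = sorted(models, key=lambda m: m["score"], reverse=True)
    top_names = {m["name"] for m in ranked[:top_k]}
    weights = {}
    for m in models:
        weight = 1.0
        if m["is_new"]:
            weight *= new_boost  # collect data on new models faster
        if m["name"] in top_names:
            weight *= top_boost  # show strong models more often
        weights[m["name"]] = weight
    return weights


def sample_battle(weights, rng):
    """Draw two distinct models, each with probability proportional to its weight."""
    names = list(weights)
    first = rng.choices(names, weights=[weights[n] for n in names], k=1)[0]
    rest = [n for n in names if n != first]
    second = rng.choices(rest, weights=[weights[n] for n in rest], k=1)[0]
    return first, second


if __name__ == "__main__":
    # Hypothetical models and scores, for illustration only.
    pool = [
        {"name": "model-a", "is_new": False, "score": 1200},
        {"name": "model-b", "is_new": False, "score": 1100},
        {"name": "model-c", "is_new": True, "score": 1000},
    ]
    print(sample_battle(sampling_weights(pool, top_k=1), random.Random()))
```

Because upsampled models simply appear in more battles, their ratings gain precision sooner; the weights change how often a model is shown, not how any single vote is counted.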

We Support Open Research

Beyond the leaderboard, we’re actively developing new artifacts and ways of understanding human preference with clarity and precision. Keeping humans in the loop of AI progress is important. Understanding human preference is a critical part of evaluating AI systems designed for human use.

That’s why we also contribute to open research by working on statistical methods such as style and sentiment control. This includes decomposing preference into components like tone, helpfulness, formatting, and emotional resonance, which are key variables in understanding how AI is perceived and trusted in the wild. When pre-release testing and data help models optimize for millions of people’s preferences, that’s a positive thing! So far, 9 papers and 15 blog posts on evaluation and human preference have been published.
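One way to picture "controlling for style" is to fit a pairwise logistic model in which each battle is explained by both the identity of the two models and a style feature, so the effect of style gets its own coefficient instead of being absorbed into the model strengths. The sketch below does this with a single hypothetical feature, `style_diff` (for example, a normalized length difference between response A and response B), and plain gradient ascent. The feature, the hyperparameters, and the function names are assumptions made for illustration, not the method used on the platform.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_style_controlled(battles, models, lr=0.1, l2=0.01, steps=2000):
    """Fit per-model strengths plus one style coefficient.

    battles: (model_a, model_b, style_diff, a_won) tuples, with a_won in {0, 1}.
    Returns (strengths dict, style coefficient).
    lr, l2, and steps are illustrative hyperparameters.
    """
    index = {name: i for i, name in enumerate(models)}
    n = len(models)
    coef = [0.0] * (n + 1)  # last slot holds the style coefficient
    for _ in range(steps):
        grad = [-l2 * c for c in coef]  # L2 penalty keeps the fit bounded
        for model_a, model_b, style_diff, a_won in battles:
            x = [0.0] * (n + 1)
            x[index[model_a]] = 1.0   # +1 for the model shown as A
            x[index[model_b]] = -1.0  # -1 for the model shown as B
            x[n] = style_diff
            p_a = sigmoid(sum(c * xi for c, xi in zip(coef, x)))
            for j in range(n + 1):
                grad[j] += (a_won - p_a) * x[j]
        coef = [c + lr * g for c, g in zip(coef, grad)]
    strengths = {name: coef[i] for name, i in index.items()}
    return strengths, coef[n]
```

In this framing, a large style coefficient means voters are responding to presentation, and the remaining per-model strengths estimate preference with that effect held fixed.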

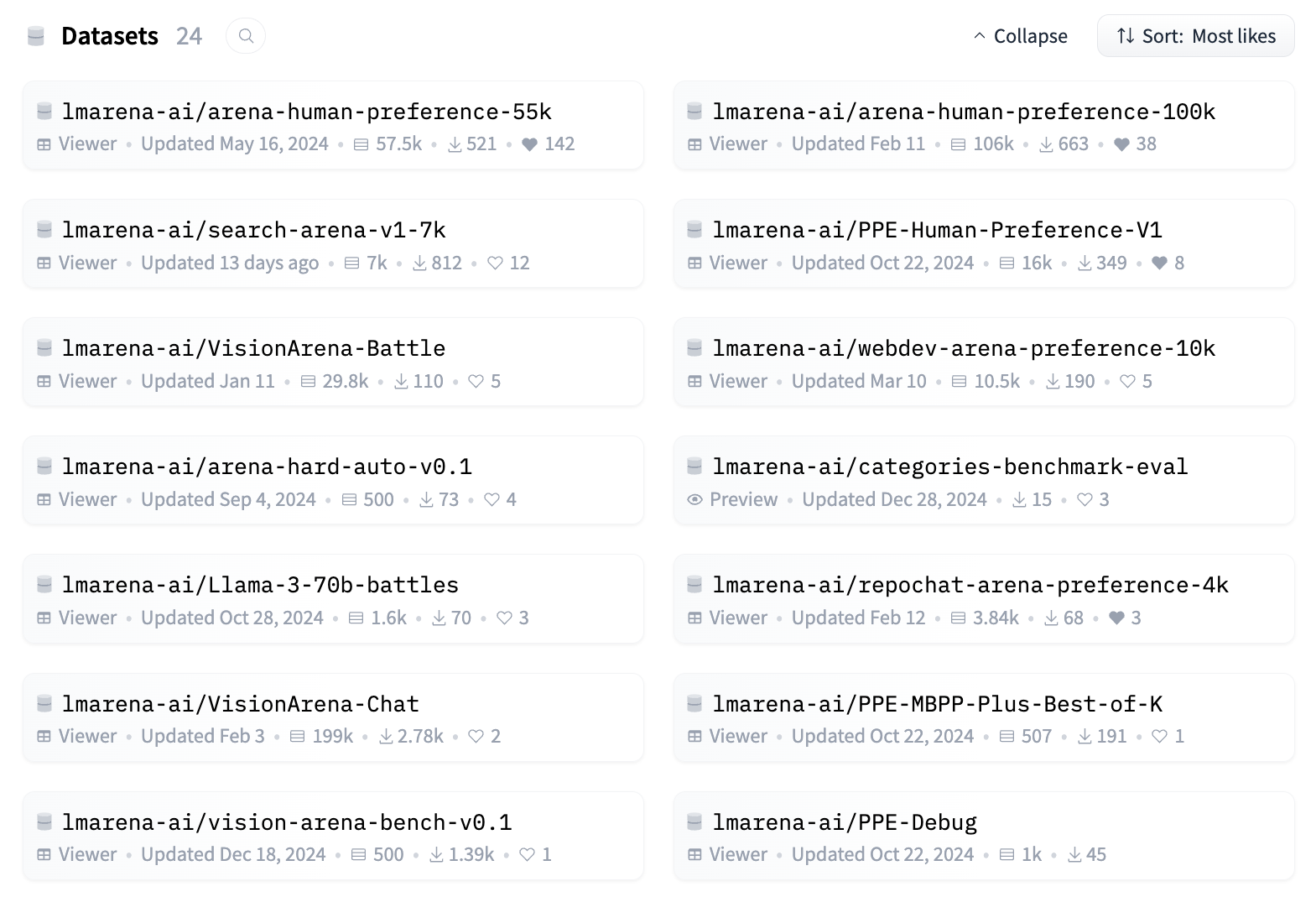

As part of our commitment to AI transparency, we’ve also released public datasets, shared evaluation methodology and published papers to help everyone in the ecosystem learn and build better AI. We also share a percentage of the collected data back with model providers to help them improve their models from our community’s perspective. Check out more of our ongoing research on our blog.

Where We Are Headed Next

LMArena started as a scrappy academic project from UC Berkeley: just a handful of PhD students and undergrads working day and night on a research prototype. Since then, we’ve announced that we’re becoming a company to scale up and act faster on all the community feedback we have received to date. We have also recently raised $100M in seed funding and launched a new UI experience for everyone. As a company, our mission remains the same: to bring the best AI models to everyone, and improve them through real-world community evaluations. Our vision is to create an open space to try all the best AIs and shape their future through collective feedback.

We know AI companies want access to neutral and reliable evaluation services to speed up model development and improve real-world performance. We believe:

- Evaluation should be open to everyone, not just done in labs.

- People’s preferences are critical to understanding the best models.

- Rigorous science, transparency, fairness, and participation are the best way to make AI better, for everyone.

Whether you’re a researcher, builder, or just curious about AI, join our community!

- Join the team: lmarena.ai/jobs

- Join the conversation: discord.gg/LMArena

- Follow us on X: @lmarena_ai