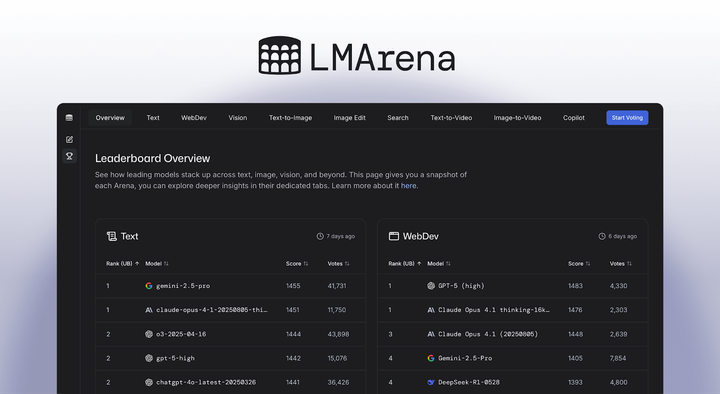

LMArena's Ranking Method

Since we launched the platform, developing a rigorous, scientifically grounded evaluation methodology has been central to our mission. A key part of this effort is proper statistical uncertainty quantification for model scores and rankings. To that end, we have always reported confidence intervals alongside Arena scores and surfaced any